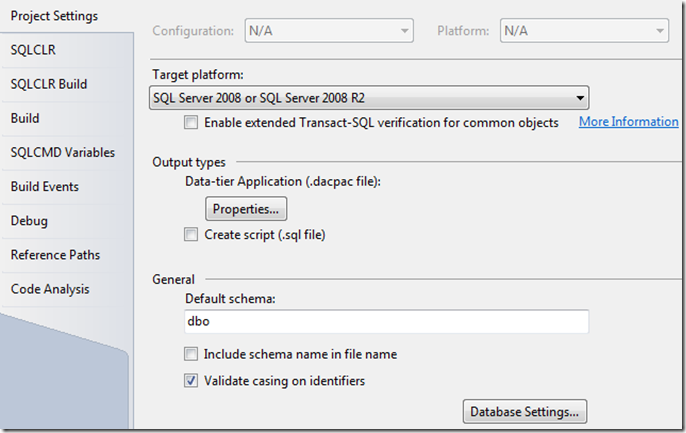

To set the properties for your SQL Project, right-click the Project and select Properties. You'll see a window containing several tabs to define your project.

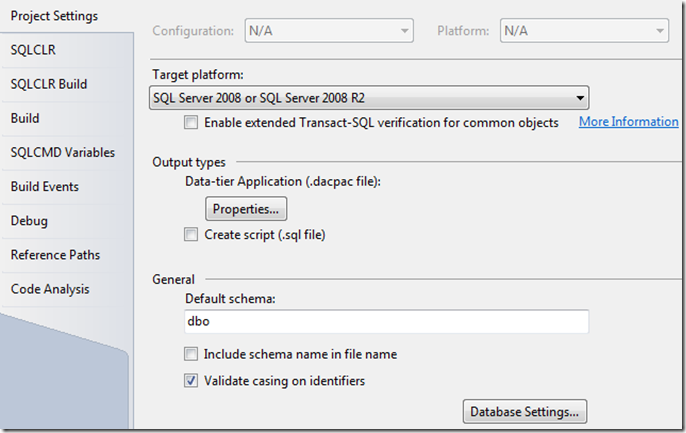

Project Settings

Here you can set your normal target platform (SQL 2005, 2008, 2012, or Azure).

You can enable the T-SQL verification option, but this is mostly for Azure projects.

If you will generally output to a script, choose the "Create script" option. On the whole, just using the dacpac file will likely provide greater flexibility in the long run as it can be adapted to a different target. Generating a script will only be guaranteed to work against the target used to generate the script.

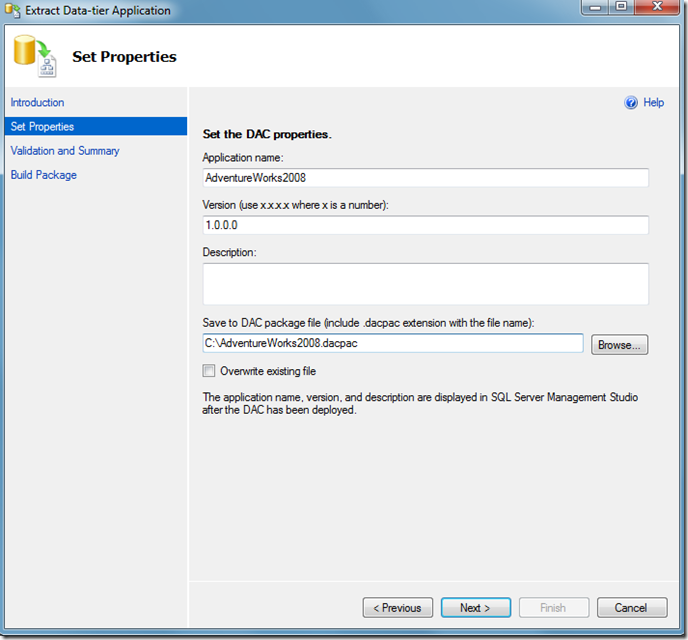

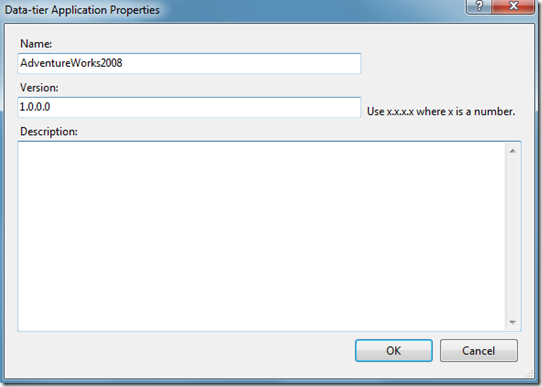

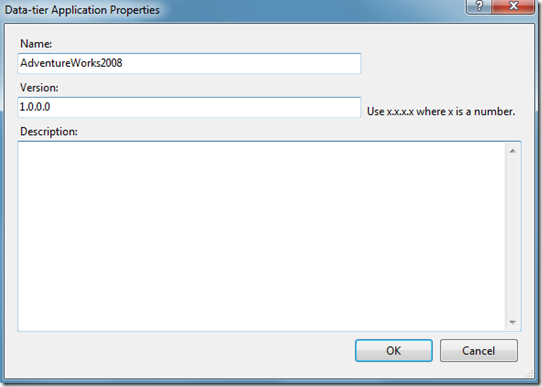

You can also set the dacpac file properties, which sadly do not do much when used against a database not using these as data-tier applications. There is some talk of enhancing the projects to use this version number and description somewhere within the database, but right now they are pretty much only there for you to populate if you wish.

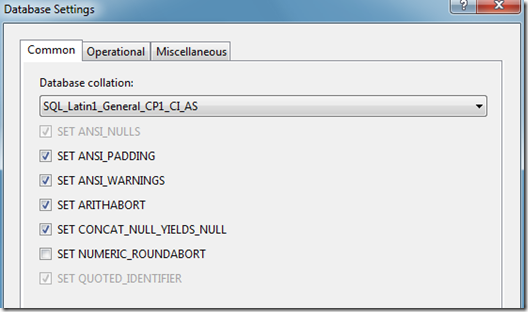

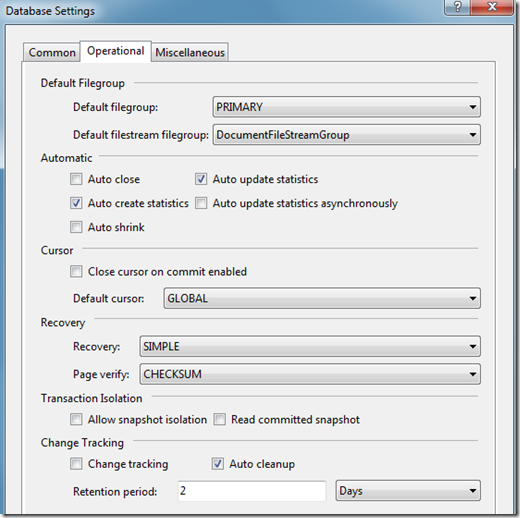

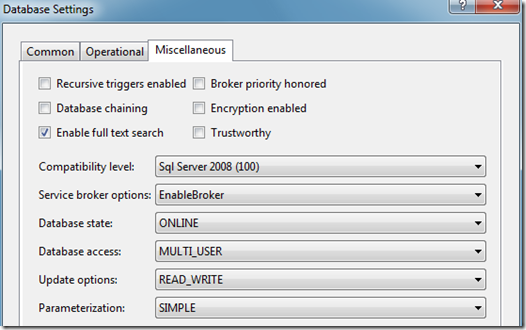

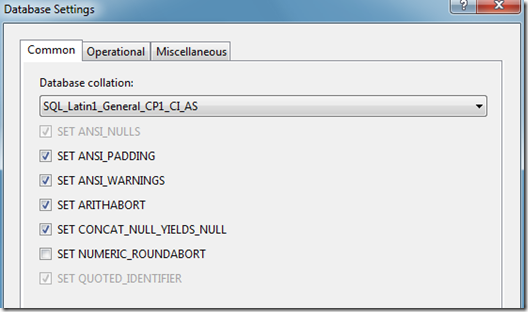

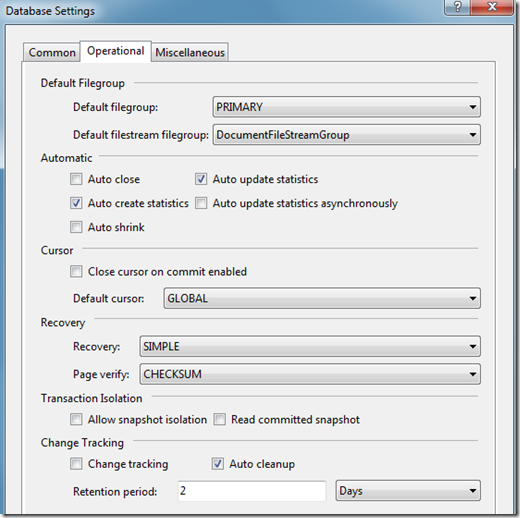

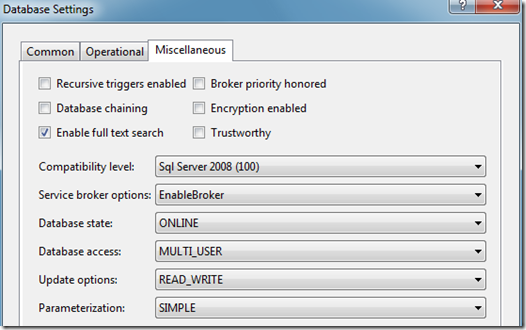

You can also set the Database Settings for your project. These options are the normal ones you would set when setting options in SQL Server. You can choose to publish these to your targets so set these to your preferences. (More on that in a future post.)

I'm going to bypass the SQLCLR and SQLCLR Build tabs as I am not familiar with them and don’t currently use either in our system.

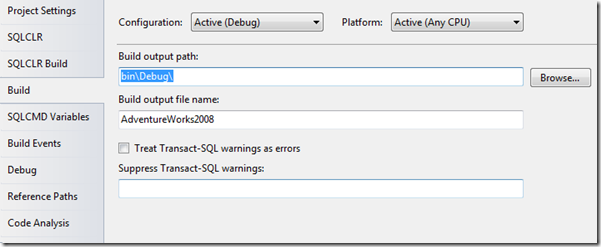

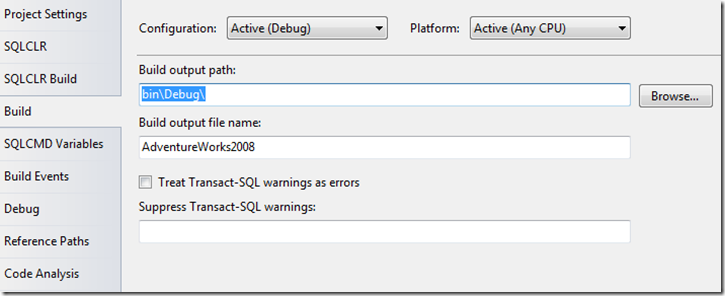

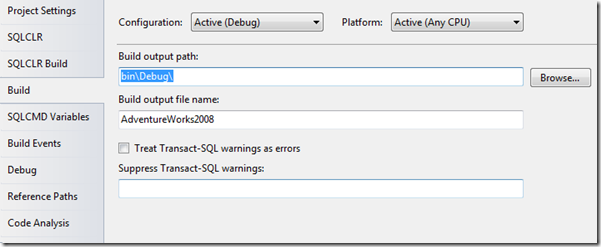

Build

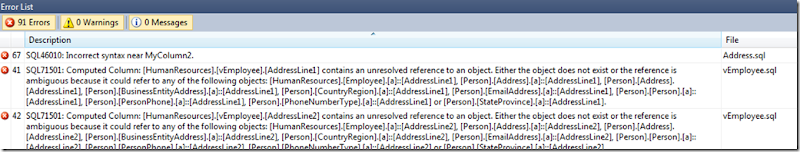

The Build tab is the place to specify which warnings to ignore, the output path for your files when you build the project, and the name of the file. You may want to specify certain warnings to ignore, especially if you use cross-database projects or any references to system databases. Those can often result in warnings about missing objects, even though they'll work perfectly fine when you publish them.

- Note that the warnings are set per configuration. If you use multiple configurations be sure to set the warnings in each one.

![clip_image006[1] clip_image006[1]](http://lh5.ggpht.com/-8KLLzDTUm5Q/UIsXCbzQ1NI/AAAAAAAAHHk/6AXGgOVQsw4/clip_image006%25255B1%25255D_thumb%25255B2%25255D.png?imgmax=800) SQLCMD Variables

SQLCMD Variables

SQLCMD variables can be used throughout the project as placeholders for values passed in at publication time. For example, you may choose to change the way your scripts run based on your Development, Customer Acceptance, or Production environments. Define these variables here and use them wherever applicable in the project by inserting them as $(DeployType). SSDT will substitute them with the proper value on publication.

Build Events

Build Events

Build events can be set up to run certain commands before and after the project is built. You can also choose to run the Post-Build event commands only when the project builds successfully or on every build.

Debug

Start Action and Target Connection String should make sense for those who need to change them. One item to note is that the debug action defaults to a SQL Express type instance of your database that is released to (localhost)\DBName. This will run within SSDT so you can try out your T-SQL before committing the changes in the project.

** Note that if you use FILESTREAM, you will not be able to use these user instances. You will need to change your debug database to a SQL Server install.

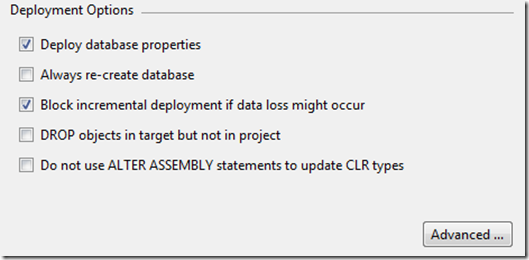

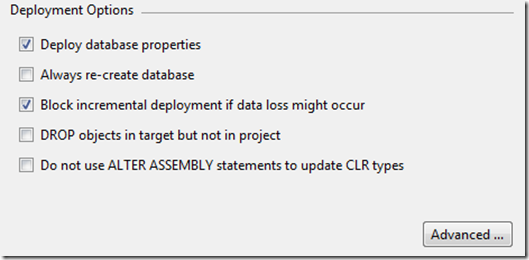

In your Deployment Options, you have a screen that looks something like this:

"Block incremental deployment" is one to be aware of. If you run into any constraints, refactoring, data type changes, NULL to NOT NULL, or similar changes, you could have your debug action stop unexpectedly. It can be really useful to avoid dropping columns that could contain data, but if you are aware of the changes, this option could also get in the way of normal releases. According to the MS documentation, this will only block the change if there is data in the table.

"DROP Objects in target but not in project" can be useful to make sure that everyone is running with what's in the project and that they don't have leftover objects that could interfere with testing. However, it's also possible to lose a bunch of work if you publish a project with this option against your local instance containing the stored procedure you've worked on for the last 2 weeks.

"Advanced" contains a lot of different options about what to compare and how to generate the changes. Some of the more interesting options include:

- Allow incompatible platform - this will let you push your changes to a version of SQL Server other than the one specified in the project properties. The changes will fail if you try to do something not allowed in the target, but useful in case you have your project set to the lowest version supported.

- "Drop xyz not in source" - these options control what will be dropped from the target if it's not defined in the source. The default options are likely good for most users.

- "Ignore xyz" - defines what should be ignored when comparing the project against the target. You may want to ignore details about the partition schemes, or what permissions are set in the target

- "Include Transactional Scripts" will set up the release script as transactions when publishing the database. This is useful when you want to make sure that if one part of the publish action fails, the change script is rolled back.

- "Verify Deployment" - stops the publish actions if the script could run into some problems.

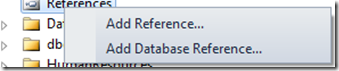

Reference Paths

This section is used to modify and maintain the various Server & Database variables used with cross-database references. See the "External Database References" page for more detail.

Code Analysis

This option can be useful to check for common issues such as using reserved words, non-standard characters in object names, or using "select *" in your queries. You can also specify whether to show these as errors or warnings. Set appropriately for your environment.

![clip_image006[1] clip_image006[1]](http://lh5.ggpht.com/-8KLLzDTUm5Q/UIsXCbzQ1NI/AAAAAAAAHHk/6AXGgOVQsw4/clip_image006%25255B1%25255D_thumb%25255B2%25255D.png?imgmax=800)